OSS Deployment Guide

In this guide we'll show you how to deploy an Open Source Alokai application to a production server.

This guide is for Open Source Applications. If you have Enterprise support, please refer to the Cloud Deployment Guide or see the Enterprise Onboarding Course.

Prerequisites

- A Linux server with SSH access

- A domain name and the ability to point it to your server's IP address


Prepare Server

Update Server

The apt-get update command refreshes the package index files on the system, which contain information about available packages and their versions.

apt-get update

Install Node.js

curl -fsSL https://deb.nodesource.com/setup_18.x | bash - && apt-get install -y nodejs

Ensure Node.js is installed:

node -v
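If you want to script this check, the version string can be compared against the major version installed above. This is a minimal sketch, not part of the official tooling: the function names `node_major` and `node_is_supported` are hypothetical, and the default of 18 is assumed from the `setup_18.x` script used in the install step.

```shell
# Extract the major version from a string such as "v18.19.0".
node_major() {
  version="${1#v}"        # drop the leading "v"
  echo "${version%%.*}"   # keep everything before the first dot
}

# Succeed only if the given version meets the required major version.
# The second argument is optional and defaults to 18 (an assumption).
node_is_supported() {
  [ "$(node_major "$1")" -ge "${2:-18}" ]
}

# Usage on the server:
#   node_is_supported "$(node -v)" || echo "Node.js 18 or newer expected" >&2
```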

Install yarn

npm install --global yarn

Ensure yarn is installed:

yarn -v

Install nginx

apt-get install nginx

Install certbot:

- Install snapd:

apt-get install snapd

- Install certbot:

snap install --classic certbot

Link certbot to your path:

ln -s /snap/bin/certbot /usr/bin/certbot

Setting up a reverse proxy

We're running two applications, one for the frontend and one for the backend. The frontend is running on port 3000 and the backend is running on port 4000. When someone visits our website, we want to serve the frontend application. When someone makes a request to our API, we want to serve the backend application. We'll use Nginx to proxy the requests to the correct application.
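To make the routing concrete, here is a rough sketch of the decision Nginx will make for each request, written as a shell function. It is only an illustration: `route_request` is a hypothetical name, and it assumes the `/api` prefix and the two ports used in this guide. It prints the upstream address followed by the path that upstream would receive.

```shell
# Print the upstream and the path it would receive for a request path.
route_request() {
  case "$1" in
    /api*) echo "127.0.0.1:4000 ${1#/api}" ;;  # backend; the '/api' prefix is stripped
    *)     echo "127.0.0.1:3000 $1" ;;         # everything else goes to the frontend
  esac
}

route_request /api/products     # -> 127.0.0.1:4000 /products
route_request /category/shoes   # -> 127.0.0.1:3000 /category/shoes
```

Note that this mirrors a prefix match, so any path that begins with `/api` is sent to the backend.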

Configure Nginx

Create an nginx config file at /etc/nginx/sites-enabled/vsfcommunity:

server {

server_name shoppernauts.com; # setup your domain here

gzip on;

gzip_types text/plain application/xml text/css application/javascript;

gzip_min_length 1000;

location / {

# expires $expires;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_read_timeout 1m;

proxy_connect_timeout 1m;

proxy_pass http://127.0.0.1:3000; # set the address of the frontend instance here

}

location /api {

# expires $expires;

rewrite ^/api(.*)$ $1 break; # Remove the '/api' prefix from the URI

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_read_timeout 1m;

proxy_connect_timeout 1m;

proxy_pass http://127.0.0.1:4000; # set the address of the api instance here

}

}
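Before moving on, it can help to validate the file and reload nginx so the new server block takes effect. The sketch below is one way to do that, assuming nginx is managed by systemd on your server; `reload_nginx_if_valid` is a hypothetical helper name. It relies on `nginx -t`, which tests the configuration without applying it.

```shell
# Validate the configuration and reload nginx only if validation passes.
reload_nginx_if_valid() {
  if nginx -t; then
    systemctl reload nginx
  else
    echo "nginx configuration is invalid; not reloading" >&2
    return 1
  fi
}

# Usage on the server:
#   reload_nginx_if_valid
```

Reloading only after a successful test avoids taking the site down because of a typo in the config.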

As you may have noticed, there is no mention of SSL in the nginx config file. We'll configure SSL now using certbot. Of course, if you already have a method of obtaining SSL certificates, you can skip this step.

Run certbot:

Certbot is a free and open source tool that will help us get a free SSL certificate from Let's Encrypt. Not only is this certificate free, but it's also trusted by all major browsers. Certbot will even automatically renew the certificate for us when it's about to expire.

Follow the prompts to configure certbot. I recommend selecting the option to redirect all HTTP traffic to HTTPS.

certbot --nginx

When certbot is done, your nginx config file will look something like this:

server {

server_name shoppernauts.com; # setup your domain here

gzip on;

gzip_types text/plain application/xml text/css application/javascript;

gzip_min_length 1000;

location / {

# expires $expires;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_read_timeout 1m;

proxy_connect_timeout 1m;

proxy_pass http://127.0.0.1:3000; # set the address of your frontend instance here

}

location /api {

# expires $expires;

rewrite ^/api(.*)$ $1 break; # Remove the '/api' prefix from the URI

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_read_timeout 1m;

proxy_connect_timeout 1m;

proxy_pass http://127.0.0.1:4000; # set the address of api instance here

}

listen 443 ssl; # managed by Certbot

ssl_certificate /etc/letsencrypt/live/shoppernauts.com/fullchain.pem; # managed by Certbot

ssl_certificate_key /etc/letsencrypt/live/shoppernauts.com/privkey.pem; # managed by Certbot

include /etc/letsencrypt/options-ssl-nginx.conf; # managed by Certbot

ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem; # managed by Certbot

}

server {

if ($host = shoppernauts.com) {

return 301 https://$host$request_uri;

} # managed by Certbot

listen 80;

server_name shoppernauts.com;

return 404; # managed by Certbot

}
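Since Certbot is expected to renew the certificate automatically, you may want to confirm that renewal would work without waiting for expiry. A minimal sketch follows, using `certbot renew --dry-run`, which simulates renewal without replacing the installed certificates. The wrapper name `check_renewal` and its messages are hypothetical.

```shell
# Simulate a renewal and report the outcome.
check_renewal() {
  if certbot renew --dry-run; then
    echo "renewal dry run succeeded"
  else
    echo "renewal dry run failed" >&2
    return 1
  fi
}

# Usage on the server:
#   check_renewal
```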

Setting up Services

Overview

systemctl is a command-line tool that allows for the management and monitoring of the systemd system and service manager. It is used to start, stop, and restart services, as well as to configure services to automatically start when the system boots. We'll use it to start our frontend and backend applications. To do this, we'll first create a service for each application.

Create a service for the frontend

Create a file called vsfcommunity-web.service in /etc/systemd/system/ and paste the following contents into it:

[Unit]

Description=vsfcommunity-web service

Documentation=https://vsfcommunity.dev

After=network.target

[Service]

Restart=always

RestartSec=10

TimeoutSec=300

WorkingDirectory=/var/www/html/live/apps/web

ExecStart=/usr/bin/bash -c 'yarn start'

[Install]

WantedBy=multi-user.target

# /etc/systemd/system/vsfcommunity-web.service

Create a service for the API

Create a file called vsfcommunity-server.service in /etc/systemd/system/ and paste the following contents into it:

[Unit]

Description=vsfcommunity-server service

Documentation=https://vsfcommunity.dev

After=network.target

[Service]

Restart=always

RestartSec=10

TimeoutSec=300

WorkingDirectory=/var/www/html/live/apps/server

ExecStart=/usr/bin/bash -c 'yarn start'

[Install]

WantedBy=multi-user.target

# /etc/systemd/system/vsfcommunity-server.service
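The two unit files differ only in their description and working directory, so they could also be generated from one template. The following is a sketch under that assumption: `render_unit` is a hypothetical helper, and its two arguments (service name and app directory) are illustrative inputs rather than anything defined by systemd.

```shell
# Print a systemd unit for one app.
#   $1 = service name, $2 = app directory under /var/www/html/live/apps
render_unit() {
  cat <<EOF
[Unit]
Description=$1 service
Documentation=https://vsfcommunity.dev
After=network.target

[Service]
Restart=always
RestartSec=10
TimeoutSec=300
WorkingDirectory=/var/www/html/live/apps/$2
ExecStart=/usr/bin/bash -c 'yarn start'

[Install]
WantedBy=multi-user.target
EOF
}

# Preview the frontend unit on standard output.
render_unit vsfcommunity-web web
```

To install a unit, redirect the output into /etc/systemd/system/, for example `render_unit vsfcommunity-server server > /etc/systemd/system/vsfcommunity-server.service`, then run `systemctl daemon-reload` so systemd picks up the new or changed file.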

If you're new to systemd, here's a quick overview of how to use it:

To enable a service:

systemctl enable vsfcommunity-web

To start a service:

systemctl start vsfcommunity-web

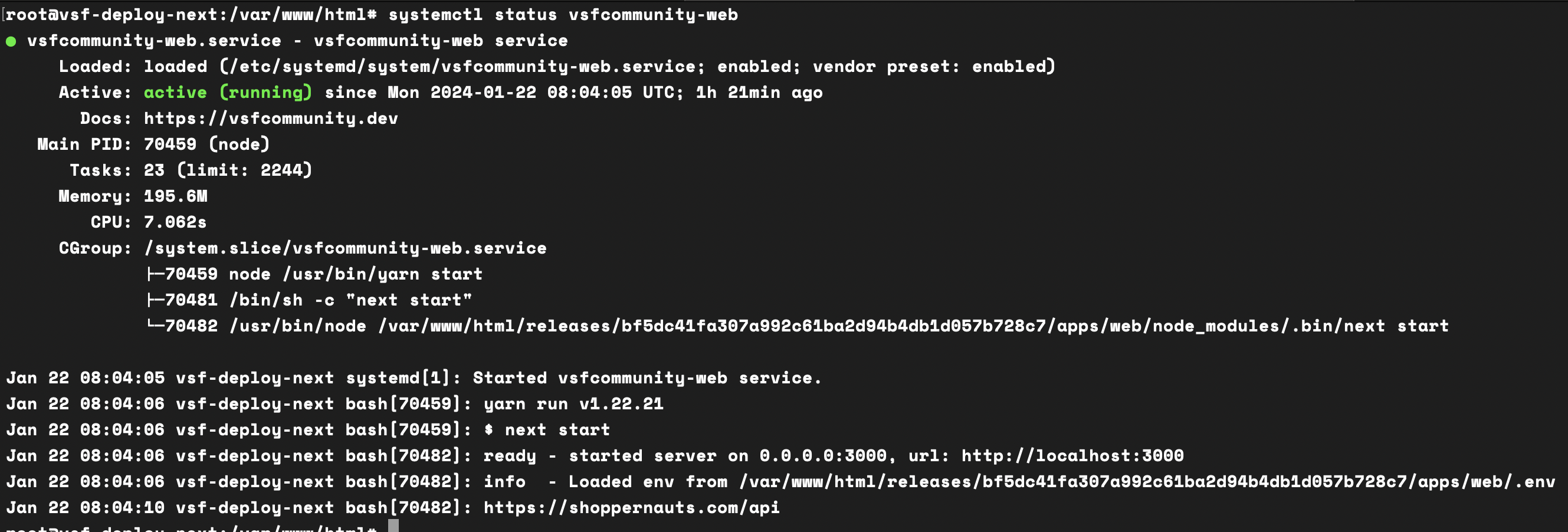

To check the status of a service:

systemctl status vsfcommunity-web

Checking the status will give you information about the service, including whether it's running or not.

To stop a service:

systemctl stop vsfcommunity-web

Enable the services

In a subsequent step, we will create a deployment script that will automatically start the services. However, we'll need to enable the services before they can be run. To do this, run the following commands:

systemctl enable vsfcommunity-web

systemctl enable vsfcommunity-server
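To confirm that both services are now enabled, you can query systemd with `systemctl is-enabled`, which prints the state and exits non-zero when a unit is not enabled. The helper below is a small sketch of that check; the name `check_enabled` is hypothetical.

```shell
# Report each service's enablement state; fail if any is not enabled.
check_enabled() {
  status=0
  for service in "$@"; do
    state=$(systemctl is-enabled "$service" 2>/dev/null) || status=1
    echo "$service: ${state:-unknown}"
  done
  return "$status"
}

# Usage on the server:
#   check_enabled vsfcommunity-web vsfcommunity-server
```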